Being creative is no small task. We do our best to cultivate and cherish the imagination of young children, and we struggle to hold on to it as we get older. But what exactly is creativity? Can its rules be written down? Is there an algorithm for it? Can we teach a computer to be creative? As the maturing field of artificial intelligence blossoms, this philosophical question might just be answered. To be clear, artificial intelligence doesn’t mean Terminators roaming the streets. In a narrower sense, it is the science, the engineering, and, arguably, the art of teaching computers to learn patterns.

Most researchers attempt to solve this problem by copying how a human learns and translating that into computer code. Generally, a person learns by seeing many examples of something. A baby might see many cars and eventually learn to associate a specific pattern of wheels and windows with a car. In code, this means building a computer model that takes in an image, recognizes patterns of pixels as wheels and windows, and classifies that pattern as “car”. We may need only a few examples to learn to recognize something. An AI system, by contrast, may need thousands, or even hundreds of thousands, to identify the object accurately. This need for massive amounts of learning data, called “training data” in the AI field, is one of the biggest bottlenecks in building these computer models. To solve this problem, humans got creative.
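
As a rough illustration of “learning from examples”, here is a minimal nearest-centroid classifier in Python. It assumes that a hypothetical detector has already counted the wheels and windows in each picture. Real image models learn such features from raw pixels themselves. In this sketch, “training” just averages the examples for each label, and a new object receives the label whose average it is closest to.

```python
import math
from collections import defaultdict

# Hypothetical training data: (wheels, windows) counts paired with a label.
# A real image model would learn its own features from raw pixels instead.
TRAINING_DATA = [
    ((4, 6), "car"),
    ((4, 4), "car"),
    ((4, 5), "car"),
    ((2, 0), "bicycle"),
    ((2, 0), "bicycle"),
    ((2, 0), "bicycle"),
]


def train_centroids(examples):
    """'Learn' by averaging the feature vectors seen for each label."""
    sums = defaultdict(lambda: [0.0, 0.0])
    counts = defaultdict(int)
    for (wheels, windows), label in examples:
        sums[label][0] += wheels
        sums[label][1] += windows
        counts[label] += 1
    return {
        label: (total[0] / counts[label], total[1] / counts[label])
        for label, total in sums.items()
    }


def classify(centroids, features):
    """Predict the label whose average example is closest to `features`."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


if __name__ == "__main__":
    centroids = train_centroids(TRAINING_DATA)
    print(classify(centroids, (4, 5)))  # car
    print(classify(centroids, (2, 1)))  # bicycle
```

This only works with six examples because the two classes are very easy to tell apart. Messier real-world pictures are part of the reason image models need far more training data.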

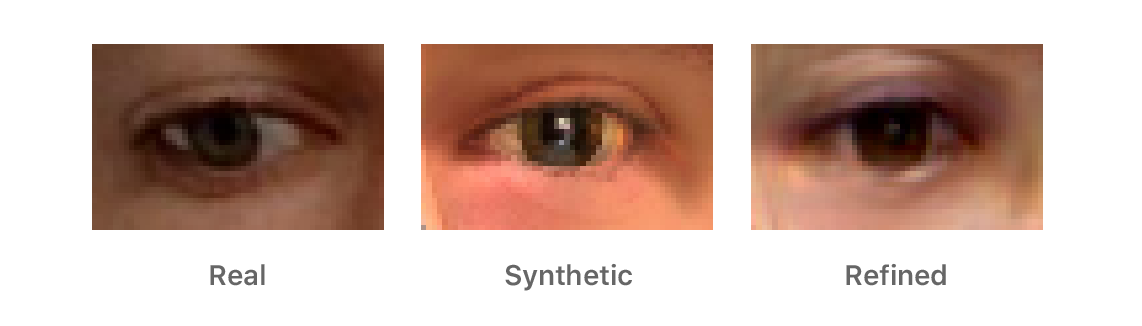

A group of AI researchers from Apple (yes, the company that makes your iPhone) created a method for computers to generate their own training data. Their task was to build software that could classify which direction a person was looking, but the computer needed more data than they had in order to learn. Their solution used a technique called Generative Adversarial Networks, or GANs. The term “adversarial” comes from the fact that two AI networks compete against each other to create the image data.

The original inventors of GANs borrowed an idea from the old “cops and robbers” thought experiment in economics and game theory. It goes something like this: a thief’s job is to print fake money, and a cop checks the money to see whether it is real. In the beginning, the cop doesn’t have very good ways to tell real money from fake money, so he lets most of it pass. The thief only needs to print pictures of bills on his home printer to get by. But as the cop gets better technology for inspecting the money, the thief must get better machines to print it. As the technology on both sides keeps improving, the thief becomes able to print money that looks incredibly real to almost everyone. The only exception is the cop, whose state-of-the-art scanners can still tell the difference. This is essentially how Generative Adversarial Networks work in AI.
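
For readers who want the math, the original GAN formulation writes the cop-and-thief game as a single score. In this score, $D(x)$ is the cop’s estimated probability that a sample $x$ is real, $G(z)$ is the thief’s fake made from random noise $z$, $p_{\text{data}}$ is the distribution of real data, and $p_z$ is the noise distribution. The cop $D$ tries to push the score up, and the thief $G$ tries to push it down:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The first term rewards the cop for calling real money real. The second rewards him for catching fakes. The thief lowers the score by making fakes that drive $D(G(z))$ toward 1.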

There is one AI network called the Generator. Its job is simply to generate images. At the very beginning of the learning process, the Generator is weak and creates images that are pure noise, like the static on an old-school TV. The other network is usually called the Discriminator, but we can think of it as the Decider. Its job is to decide whether the images it sees are real or came from the Generator. The Decider starts off lenient and lets some bad, noisy images through. The Generator gets a little better at creating synthetic images, and the Decider gets better at spotting the fakes. After many rounds of creating and deciding, the Generator becomes incredibly good at creating realistic images. In the case of the Apple researchers, the network can create photo-realistic eyes that look in various directions. Whether this means we have taught the computer ‘creativity’ depends on how we interpret ‘creativity’, but the system has definitely learned how to create.
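
To make the back-and-forth concrete, here is a deliberately tiny sketch in plain Python. It rests on several hypothetical simplifications. Instead of images, the “real data” is just numbers drawn from a bell curve centered on 2.0. The Generator is a single learned shift `b` added to random noise. The Decider is a one-feature logistic model, `D(x) = sigmoid(w * x + c)`. Both players take small gradient steps at the same time. The Generator uses the common “non-saturating” variant: it tries to raise log D(fake) rather than lower log(1 − D(fake)). Real GANs use deep neural networks with vastly more parameters, so this only illustrates the game and is not the researchers’ actual method.

```python
import math
import random

# Toy 1-D GAN in plain Python (a hypothetical illustration, not Apple's method).
# "Real" data: numbers from a bell curve centered on REAL_MEAN (stand-in for real images).
# Generator: fake = noise + b, where only the shift b is learned.
# Decider (Discriminator): D(x) = sigmoid(w * x + c), its confidence that x is real.
REAL_MEAN = 2.0
REAL_STD = 1.0
LEARNING_RATE = 0.05
BATCH_SIZE = 128
STEPS = 3000


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(seed: int = 0) -> float:
    """Train both players and return the Generator's learned shift b."""
    rng = random.Random(seed)
    b = 0.0  # the Generator starts out emitting plain noise centered on 0
    w = c = 0.0  # the Decider starts out unable to tell anything apart

    for _ in range(STEPS):
        real = [rng.gauss(REAL_MEAN, REAL_STD) for _ in range(BATCH_SIZE)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(BATCH_SIZE)]
        d_real = [sigmoid(w * x + c) for x in real]
        d_fake = [sigmoid(w * x + c) for x in fake]

        # Decider: climb mean[log D(real)] + mean[log(1 - D(fake))].
        grad_w = (sum((1 - d) * x for d, x in zip(d_real, real))
                  - sum(d * x for d, x in zip(d_fake, fake))) / BATCH_SIZE
        grad_c = (sum(1 - d for d in d_real) - sum(d_fake)) / BATCH_SIZE

        # Generator: climb mean[log D(fake)] ("non-saturating" loss),
        # using d/db log D(noise + b) = (1 - D) * w.
        grad_b = sum((1 - d) * w for d in d_fake) / BATCH_SIZE

        # Both players update at the same time from the same batch.
        w += LEARNING_RATE * grad_w
        c += LEARNING_RATE * grad_c
        b += LEARNING_RATE * grad_b

    return b


if __name__ == "__main__":
    print(f"Fakes are now centered near {train_toy_gan():.2f}; "
          f"real data is centered at {REAL_MEAN}")
```

This Decider can only draw a single threshold on the number line. It can therefore only notice when the fakes sit in the wrong place on average, which is why the toy Generator learns nothing but a shift. A deep Decider can pick up on far subtler differences, such as the shape of an eye.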

Image Source: https://machinelearning.apple.com/images/journals/gan/color_gaze.png

Apple can now use its Generator network to create many examples of eyes looking in different directions. Those examples can in turn be used to train software for, say, an app that lets emojis copy your facial expressions. Car companies could also use this technology to detect when a driver is dozing off at the wheel and issue a safety alert.

Apple’s research paper highlighted two important trends in AI. First, engineers developed a method to overcome a lack of data by synthesizing the data computers need in order to learn. Second, research traditionally stems from universities or institutions like the NIH or NASA, but AI is an exception. In AI, much of the research comes from companies such as Apple, Google, Microsoft, and Facebook, which increases funding for these essential developments. As AI matures with corporate funding, we should keep watching how our definitions of the more human aspects of life, like creativity, thinking, and reasoning, evolve.