One of the biggest topics in the news over the past 2 years has been advances in artificial intelligence, or AI. When many people think of AI, they think of large language models like ChatGPT or generative art programs like DALL·E. Astronomers use different AI tools to study large datasets that would take too long to examine manually. These machine-learning algorithms, or MLs, are designed to take in data, analyze them using parameters that researchers set by training the algorithm on previous case studies, and sort the results into different categories. For example, astronomers use MLs to identify important features and difficult-to-detect patterns in surveys of the sky. But the limitations of these methods for sorting objects in space are not well understood.

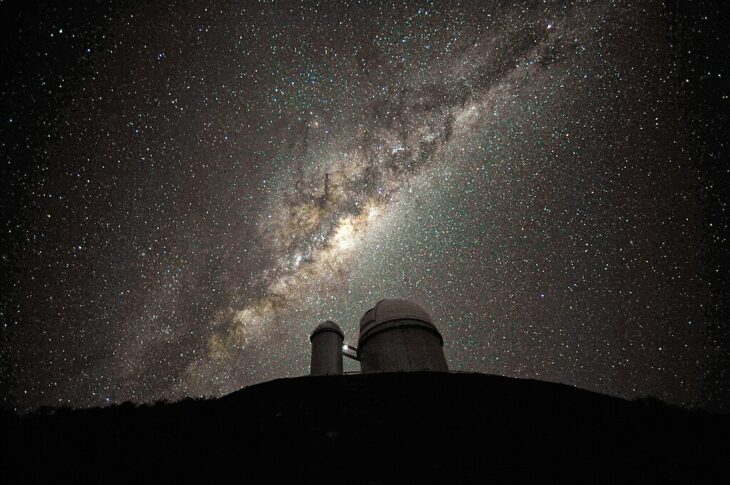

To determine these limitations, a team of scientists headed by Pamela Marchant Cortés at the University of La Serena in Chile tested the abilities of an ML developed by other researchers in 2021. The ML they tested used a combination of classification methods known as rotation-forest, random-forest, and LogitBoost to sort objects outside the Milky Way Galaxy into 3 broad categories based on their size, shape, brightness, and color. The ML classified small, dim single points with varying colors as stars; large, bright objects extending over a portion of the sky as galaxies; and small, very bright single points with blue color as quasi-stellar objects, or quasars.

The team wanted to see whether this ML could accurately classify objects in the sky that they and other humans had previously classified manually. The catch was that the objects the team wanted the ML to classify were located in a crowded region of the sky made hazy by dust in the Milky Way’s disk. Astronomers refer to this area as the zone of avoidance, or ZoA. Marchant Cortés’s team explained that the ZoA is already a difficult region to study, but they made it even more difficult by restricting their ML to images taken with medium-wavelength light that is most obscured by dust, called near-infrared light. Typically, astronomers use MLs on images that give a clear view of the target area in several types of light, but these researchers tested how well the ML performed under poor conditions with limited reference material.

Marchant Cortés’s team manually identified 15,423 objects in a specific region of the Galactic disk using short-wavelength X-ray images of the same sections of the sky that their ML analyzed in near-infrared. X-rays provide clearer images of objects behind the Milky Way because they penetrate dust the same way they penetrate human skin and muscle when a doctor takes an X-ray image. So the scientists and their ML studied images of the same places, but the scientists’ images made prominent features clearer while the ML’s images left them more obscured. The team classified 1,666 of these objects as stars, 9,726 as galaxies, and 4,031 as quasars.

Using statistical analysis, the researchers showed that they had correctly identified about 5,000 of the almost 10,000 galaxies in terms of both their classification and their position in the sky. They chose galaxies for the comparison with the ML because galaxies are large and have unique shapes that should be easier to distinguish from background or foreground objects. The investigators found that their ML correctly identified far fewer objects than the humans did. Of the nearly 5,000 galaxies the team identified with certainty, their ML correctly found only 4 galaxies in the same positions, for a success rate of 0.08%. Additionally, the ML detected nothing at all for 6,497 of the 15,423 total objects the team identified, for a failure rate of over 40%.
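These percentages follow directly from the counts reported in the study. As a quick check, the short Python sketch below recomputes them. The variable names are illustrative, and the figure of 5,000 is an assumption: it stands in for the rounded "nearly 5,000" confidently identified galaxies, not an exact count.

```python
# Object counts from the team's manual classification, as reported in the article.
counts = {"stars": 1_666, "galaxies": 9_726, "quasars": 4_031}
total_objects = sum(counts.values())

# ASSUMPTION: "nearly 5,000" is rounded to 5,000 for this check.
confident_galaxies = 5_000
ml_matches = 4        # galaxies the ML found in the same positions
ml_missed = 6_497     # objects for which the ML detected nothing


def percent(part, whole):
    """Return part as a percentage of whole."""
    return 100.0 * part / whole


success_rate = percent(ml_matches, confident_galaxies)
miss_rate = percent(ml_missed, total_objects)

print(f"total objects: {total_objects}")
print(f"ML galaxy success rate: {success_rate:.2f}%")
print(f"ML non-detection rate: {miss_rate:.1f}%")
```

The three categories sum to the 15,423 objects quoted above, the success rate comes out at 0.08%, and the non-detection rate is a little over 42%, consistent with the "over 40%" in the text.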

Marchant Cortés’s team concluded that consistently sorting ZoA objects into categories is beyond the current ability of standard AI methods in astronomy. They suspected that some of these difficulties arose because the ML’s original creators designed it to compare X-ray images, images in optical light that humans can see, and near-infrared images rather than to use near-infrared images alone. The team suggested that these tools could one day help astronomers map out the universe’s hard-to-see regions. However, they recommended that future scientists train their AIs on diverse and representative sample sets that include comparisons across several wavelength ranges of light to improve their accuracy.