What started out as a study of ethical decision making for self-driving cars turned into a study of human psychology. How do we decide, when a collision is inevitable, who or what to hit? No one wants to be confronted with a life-and-death decision, yet in driving such decisions do come up. The invention of self-driving cars has pushed this uncomfortable issue into the limelight.

Take, for example, the Trolley Problem, a common philosophical exercise in which a person is given the choice to flip a switch on a track that would send a train careening into either one person or five people. What a person chooses is often a clue to their ethics: how do they value life?

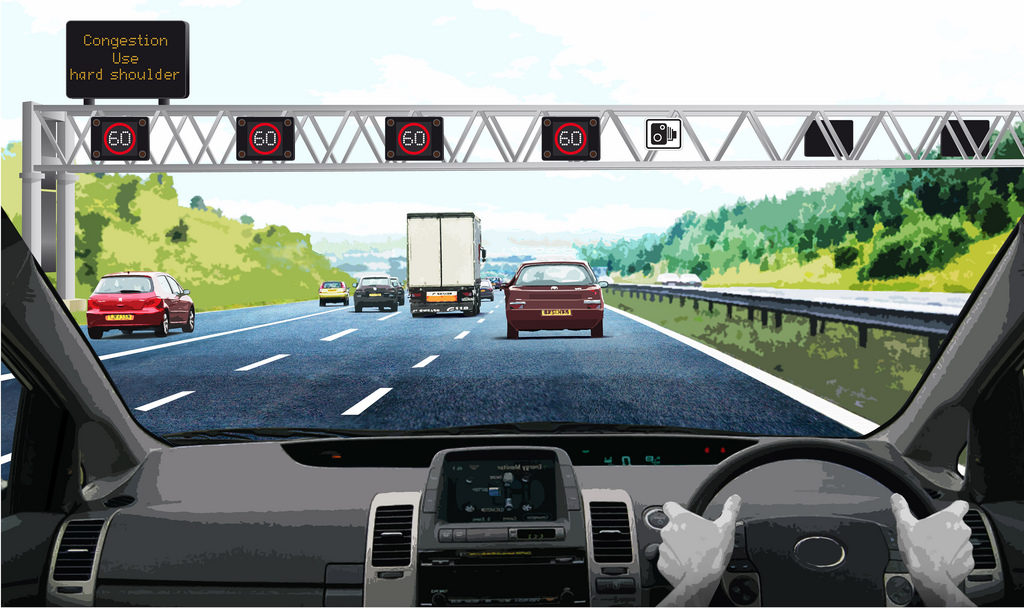

A self-driving car will have to make difficult decisions like this as well, but programming cars to make them is hard because so much depends on the context of each situation. When a car approaches two obstacles and hitting one of them is inevitable, how do we program it to choose? The task becomes harder still when these cars are expected to share the road with less predictable human drivers.

Researchers at the Institute of Cognitive Science of Osnabrück University in Osnabrück, Germany say that self-driving cars, if they are to share the road with us, should be programmed to handle these ethical decisions much as a human would. They set up a series of virtual reality experiments to model human decision making in this context, collecting quantitative data that could then be applied to self-driving car algorithms.

A total of 105 adults (76 male and 29 female) between the ages of 18 and 60, with an average age of 31, were selected for the study. The participants drove a virtual car on a two-lane road. At a certain point, fog would appear in their view. When the fog cleared, two obstacles would emerge, one in each lane, and the driver would have to choose which one to hit. The obstacles included humans, animals, inanimate objects, and combinations of those. The time allowed for the decision was also varied: participants had between one and four seconds to decide who or what to hit.

Some scenarios included an empty lane as a control, which made it possible to estimate how often a driver simply makes a mistake. It was assumed that participants would intentionally steer toward the empty lane when given the choice, so any collision in these trials was counted as an error. This “error rate” would reflect the driver’s skill and could be used to correct the rest of the data, making it more accurate.
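The control-lane idea can be sketched in a few lines of Python. This is only an illustration, not the authors' actual procedure: the function names are hypothetical, and the correction formula assumes that a driver steers into the wrong lane by accident with the same probability in every trial, which the study summary above does not state.

```python
def estimate_error_rate(control_trials):
    """Estimate how often a driver errs, from empty-lane control trials.

    control_trials: list of booleans, True when the driver hit the
    obstacle even though the other lane was empty (counted as a mistake).
    """
    if not control_trials:
        raise ValueError("need at least one control trial")
    return sum(control_trials) / len(control_trials)


def correct_choice_rate(observed_rate, error_rate):
    """Remove accidental choices from an observed choice rate.

    ASSUMPTION (not from the study): with error probability e, an
    intended choice is flipped by accident, so
        observed = (1 - e) * intended + e * (1 - intended)
    which rearranges to intended = (observed - e) / (1 - 2e).
    """
    if not 0.0 <= error_rate < 0.5:
        raise ValueError("error_rate must be in [0, 0.5)")
    intended = (observed_rate - error_rate) / (1.0 - 2.0 * error_rate)
    # Clamp to a valid proportion; sampling noise can push it outside [0, 1].
    return min(1.0, max(0.0, intended))


# Made-up numbers for illustration: 1 mistake in 4 control trials.
error_rate = estimate_error_rate([True, False, False, False])
```

With these made-up inputs the estimated error rate is 0.25. Under the same assumption, an observed choice rate of 0.7 with an error rate of 0.1 corresponds to an intended rate of roughly 0.75.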

The study produced some alarming results. In 80% of the trials, participants were more likely to hit male adults than female adults. Somewhat less reliable data in the study hinted that children are prioritized over adults, and that common pets may be prioritized over other animals. These results give a very simplistic view of the decision-making process, however, since the study did not account for scenarios in which the severity of injury to the obstacle varied.

Another informative finding is that people's decisions became more random as the time available to decide decreased. The authors note that this is consistent with previous research. The sacrificing of male adults over female adults, for example, was much less prevalent when the driver had only one second to decide rather than four.

Though further development is most assuredly needed, the authors propose that this data could serve as the basis for a simple model for programming self-driving cars. Rather than trying to simulate the immense complexity of a human brain’s neural networks using computer algorithms, a simpler “value of life” ethical model could be imposed. Collecting data on what humans would actually do in collision scenarios also shows us some uncomfortable realities of our ethics, providing opportunities for growth in both human beings and automated cars.
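To make the “value of life” idea concrete, here is a minimal Python sketch. It is a guess at what such a model could look like, not the authors' implementation. The obstacle names and numeric values are invented for illustration, and the logistic form and the `noise` parameter are assumptions added here to mimic the finding that choices become more random under time pressure.

```python
import math

# HYPOTHETICAL values, invented for illustration only. A real model would
# have to estimate one value per obstacle type from participants' choices.
HYPOTHETICAL_VALUES = {"adult": 2.0, "dog": 1.0, "trash_can": 0.0}


def probability_of_sparing_left(left, right, values, noise=1.0):
    """Probability that the obstacle in the left lane is spared.

    ASSUMPTION: the probability is a logistic function of the difference
    in value between the two obstacles. A larger `noise` flattens the
    curve toward 0.5, i.e. toward random choice.
    """
    if noise <= 0:
        raise ValueError("noise must be positive")
    difference = (values[left] - values[right]) / noise
    return 1.0 / (1.0 + math.exp(-difference))


def lane_to_hit(left, right, values):
    """Deterministic rule: steer into the lane with the lower-valued obstacle."""
    # Ties go to the right lane here, which is an arbitrary choice.
    return "right" if values[left] >= values[right] else "left"


p_calm = probability_of_sparing_left("adult", "trash_can", HYPOTHETICAL_VALUES)
p_rushed = probability_of_sparing_left(
    "adult", "trash_can", HYPOTHETICAL_VALUES, noise=10.0
)
```

In this sketch `p_rushed` sits closer to 0.5 than `p_calm`, so the higher noise setting behaves more like a coin flip, while `lane_to_hit` shows the rule a car might apply once the values are fixed.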